Every enterprise is racing to deploy Agentic AI to transform customer experience. Agentic AI promises to unify omnichannel interactions and make them personal, like walking into a neighborhood coffee shop where the barista knows you.

However, to reach the promised land, enterprises will have to arm their agents with the proper context about each customer. That context helps the agent understand where each customer is in their journey, offer helpful suggestions and relevant offers, and fire timely triggers that orchestrate a multi-channel (and multi-session) conversation.

The Promise: Customer-Facing Omnichannel Agents

We are witnessing a paradigm shift in how brands interact with their customers. The era of static chatbots is ending, replaced by Customer-Facing Omnichannel Agents. Every enterprise is excited about the potential for Agentic AI not just to answer questions, but to act—to resolve support tickets, recommend products, and guide customers through complex sales cycles.

These agents promise to break down the traditional silos between support, sales, and marketing. Imagine a world where a customer can start a conversation on WhatsApp, continue it on a web call, and finish it via SMS, with the AI agent maintaining a single, unified conversation thread. This is the vision: a seamless, consistent, and intelligent experience that meets the customer exactly where they are.

The Context Challenge

However, realizing this potential is proving to be more complex than anticipated. The core problem is that while Large Language Models (LLMs) are highly capable, they are inherently stateless: on their own, they retain no memory of the customer beyond what is supplied in the current session.

When an agent enters a conversation without knowing who the customer is, what they bought yesterday, or that they just had a frustrating service interaction, the experience falls flat. In fact, a recent Salesforce study starkly highlighted this gap: generic LLM agents without enterprise context succeed only 35% of the time on complex, multi-turn tasks.

The obvious solution is to feed the agent “context.” But as any data engineer knows, this is easier said than done. Customer context lives in a fragmented mess of applications—the CRM, the data warehouse, the helpdesk, the e-commerce platform, and so on. Dumping all this raw data into an agent’s context window is not only expensive but ineffective. As the engineering team at Anthropic explains, “Context Engineering” is the art of optimizing the information fed to an LLM. The context must be a holistic state—curated, relevant, and optimized against the model’s constraints to achieve the desired outcome consistently.
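To make the idea of curating context against a model's constraints concrete, here is a minimal sketch in Python. It assumes each piece of customer context already carries a relevance score from some upstream scorer, and it approximates token cost by word count. The `ContextItem` class, the source labels, and the `curate_context` function are hypothetical names used for illustration only, not part of any product or library.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    source: str       # hypothetical source label, e.g. "crm" or "helpdesk"
    text: str
    relevance: float  # 0..1, assumed to come from an upstream scorer


def estimate_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: roughly one token per word.
    return len(text.split())


def curate_context(items: list[ContextItem], token_budget: int) -> list[ContextItem]:
    """Greedily keep the most relevant items that still fit in the budget."""
    selected: list[ContextItem] = []
    used = 0
    for item in sorted(items, key=lambda i: i.relevance, reverse=True):
        cost = estimate_tokens(item.text)
        if used + cost <= token_budget:
            selected.append(item)
            used += cost
    return selected


items = [
    ContextItem("crm", "Loyalty tier: VIP since 2021", 0.6),
    ContextItem("helpdesk", "Open ticket: delayed delivery, customer frustrated", 0.9),
    ContextItem("warehouse", "Full clickstream export for the last 90 days " * 20, 0.2),
    ContextItem("ecommerce", "Ordered espresso machine yesterday", 0.8),
]
curated = curate_context(items, token_budget=25)
```

In this toy run the bulky, low-relevance warehouse export is left out, while the three short, high-signal facts fit comfortably within the budget. A production system would likely use a real tokenizer and a learned relevance model, but the trade-off it manages is the same.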

The Missing Piece: Agent Context Engine (ACE)

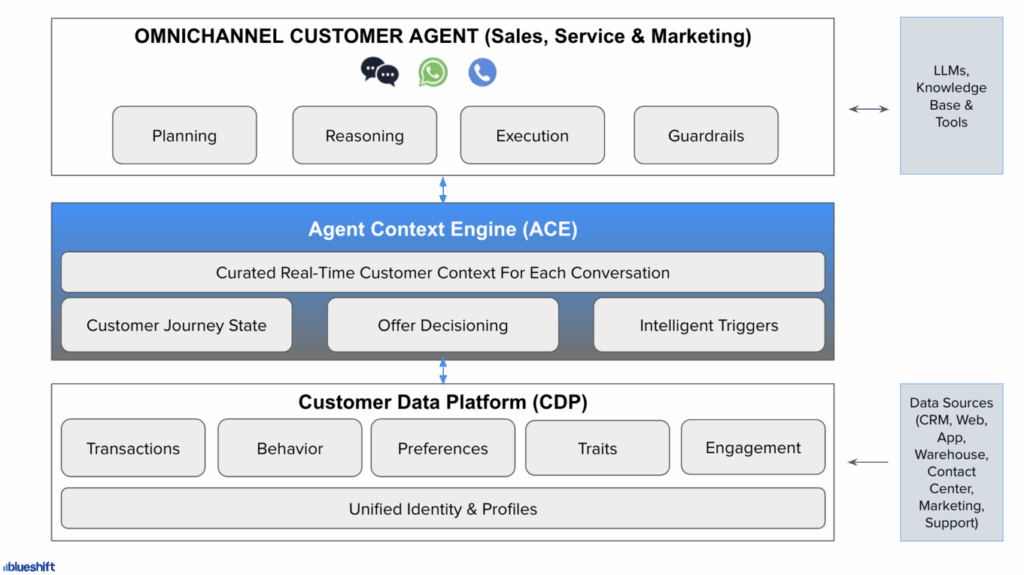

To bridge this gap, we need a dedicated layer in the tech stack. I call this the Agent Context Engine (ACE). ACE could be the “ace in the hole” for customer-facing agents.

ACE is not just a database; it is an intelligent engine that sits between your raw data and your AI agents. Its job is to provide agents with a holistic, curated, and real-time view of the customer.

ACE empowers agents with three critical capabilities:

- Customer Journey State: For an agent to seamlessly wear multiple hats (service, sales, marketing), it must understand exactly where the customer is in their journey. Is this a loyal VIP who is at risk of churning? A potential new buyer evaluating a purchase? ACE synthesizes millions of interaction signals into a clear “state” that the agent can instantly understand and act upon.

- AI Decisioning: Knowing who the customer is isn’t enough; the agent needs to know what to do. ACE provides a “brain” for the agent, using predictive models to determine the next-best offer or action. This ensures that the agent’s actions are not just reactive but proactively optimized for both customer satisfaction and business outcomes.

- Intelligent Triggers & Orchestration: Some conversations don’t end in a single session, and a customer may ask the agent to message them back later to pick up the thread. ACE keeps track of the right time, context, and channel, and triggers the follow-up sessions that continue the same conversation.
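One way to picture these three capabilities together is as a single context record that an agent reads before it responds. The sketch below is only an illustration under stated assumptions: the class names, field names, and state labels such as `vip_at_risk` are all hypothetical, and the next-best action is assumed to be produced by a separate decisioning model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class FollowUp:
    channel: str       # e.g. "sms" or "whatsapp"
    due_at: datetime   # when to re-engage the customer
    thread_id: str     # links the follow-up to the original conversation


@dataclass
class CustomerContext:
    customer_id: str
    journey_state: str      # hypothetical label, e.g. "vip_at_risk"
    next_best_action: str   # assumed output of a decisioning model
    follow_ups: list[FollowUp] = field(default_factory=list)

    def schedule_follow_up(self, channel: str, delay: timedelta,
                           thread_id: str, now: datetime) -> FollowUp:
        # Record when, where, and in which thread to resume the conversation.
        follow_up = FollowUp(channel, now + delay, thread_id)
        self.follow_ups.append(follow_up)
        return follow_up

    def due_follow_ups(self, now: datetime) -> list[FollowUp]:
        # Follow-ups whose scheduled time has arrived.
        return [f for f in self.follow_ups if f.due_at <= now]


now = datetime(2025, 1, 6, 9, 0)
ctx = CustomerContext("c-123", "vip_at_risk", "offer_priority_support")
ctx.schedule_follow_up("sms", timedelta(hours=24), "thread-42", now)

due_soon = ctx.due_follow_ups(now + timedelta(hours=1))
due_next_day = ctx.due_follow_ups(now + timedelta(hours=24))
```

Here the customer asked to be contacted a day later, so nothing is due after one hour, and the SMS follow-up for the same thread becomes due after 24 hours.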

ACE can be architected as a specialized agent itself, or as a robust tool available to other agents via emerging protocols like MCP (Model Context Protocol) for connecting agents to tools and data, and A2A (Agent2Agent) for collaborating with other agents.
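As a rough illustration of the "tool" option, the sketch below describes a context lookup in the general shape MCP uses for tools: a name, a description, and a JSON Schema for the inputs, with results returned as a list of content items. It deliberately avoids any SDK. The tool name `get_customer_context`, the in-memory store, and the handler function are assumptions made for this example, not a real ACE or CDP interface.

```python
import json

# Hypothetical tool descriptor. The field names (name, description, inputSchema)
# follow the MCP tool definition shape; the tool itself is invented.
GET_CUSTOMER_CONTEXT_TOOL = {
    "name": "get_customer_context",
    "description": "Return the curated journey state and next-best action for a customer.",
    "inputSchema": {
        "type": "object",
        "properties": {"customer_id": {"type": "string"}},
        "required": ["customer_id"],
    },
}

# Stand-in for the CDP-backed store that a real context engine would query.
_FAKE_STORE = {
    "c-123": {
        "journey_state": "vip_at_risk",
        "next_best_action": "offer_priority_support",
    },
}


def handle_get_customer_context(arguments: dict) -> dict:
    """Return a tool result: a list of content items plus an error flag."""
    customer_id = arguments["customer_id"]
    record = _FAKE_STORE.get(customer_id)
    if record is None:
        message = f"No context found for {customer_id}"
        return {"content": [{"type": "text", "text": message}], "isError": True}
    return {"content": [{"type": "text", "text": json.dumps(record)}], "isError": False}


result = handle_get_customer_context({"customer_id": "c-123"})
payload = json.loads(result["content"][0]["text"])
```

The calling agent never sees the raw CRM or warehouse data; it only receives the small, curated payload, which is the point of putting a context engine behind a tool interface.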

The Customer Data Platform (CDP) Powers ACE

It is important to recognize that ACE is not a standalone island; it is an evolution of the Customer Data Platform (CDP). While traditional CDPs focus on the difficult work of unifying data and resolving identity, the ACE layer builds on that foundation to enable intelligent conversations.

Enterprises embarking on this journey must look for a CDP that offers ACE functionality—or “graduate” their current stack to include it. Without the solid data foundation of a CDP, ACE has no fuel. But without ACE’s intelligence, the CDP is just a passive archive. Together, they unlock the true potential of AI.

Agents are poised to become the new frontend for brands, and likely the primary mode of interaction for the next generation of consumers. But an agent is only as good as the context it holds. The Agent Context Engine is the key enabler that will separate the frustration of “generic” bots from the delight of truly intelligent assistants. Brands that invest in ACE today are positioning themselves to unlock an unprecedented level of customer-centricity, delivering experiences that are not just automated but truly personal.