Customer LLMs: Adapting Large Language Models (LLMs) for Customer Engagement with Interaction Data

In the age of AI-driven personalization, large language models (LLMs) are transforming how businesses engage with customers. By harnessing the power of interaction data, companies can create more tailored, meaningful experiences that resonate with their audience. This blog will explore how adapting LLMs using customer behavior insights can elevate engagement, boost satisfaction, and drive growth. Discover the future of customer interactions powered by advanced AI.

What are Large Language Models?

Large Language Models (LLMs) have revolutionized the field of natural language processing by enabling advanced text understanding and generation. Typically based on the transformer neural network architecture, these models can process and generate human-like text by modeling context and semantics. The three primary architectures used in LLMs are encoder-only, decoder-only, and encoder-decoder models.

Encoder-only models like BERT (Bidirectional Encoder Representations from Transformers) are designed to understand and process input data. They are commonly used for tasks such as sentiment analysis, named entity recognition, and text classification, where the focus is on representing information from the input text.

Decoder-only models such as the GPT (Generative Pre-trained Transformer) family are primarily used for text generation. These models predict the next token in a sequence, making them ideal for tasks like chat, text completion, language modeling, and creative text generation.

Encoder-decoder models like T5 (Text-to-Text Transfer Transformer) and BART (Bidirectional and Auto-Regressive Transformers) combine the strengths of both encoders and decoders. They are used for tasks that require understanding and generating text, such as machine translation, text summarization, and question-answering systems.

Interaction Graphs

To leverage LLMs for customer engagement, we first need to represent customer interaction data effectively. An efficient way to do this is by using interaction graphs. In an interaction graph, nodes represent various entities such as customer profiles, catalogs of content or items, campaigns, templates, channels, and clickstream events from websites or mobile apps. Edges between these nodes denote interactions and relationships, such as a customer clicking on an item, receiving a campaign, or a user interacting with a channel.

By structuring interaction data as a directed graph, we can capture the complex and interconnected nature of customer behaviors and interactions, providing a rich dataset for training LLMs.
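To make the structure concrete, here is a minimal sketch of an interaction graph in plain Python. The class name `InteractionGraph`, the `type:id` node naming, the relation labels, and the use of Unix-second timestamps are all illustrative assumptions, not part of any specific product or library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Edge:
    source: str     # node id, e.g. "customer:42" (hypothetical naming scheme)
    target: str     # node id, e.g. "item:sku-9"
    relation: str   # interaction type, e.g. "clicked", "received"
    timestamp: int  # Unix seconds (assumed unit)


class InteractionGraph:
    """Directed multigraph with labeled, timestamped edges."""

    def __init__(self):
        self._out = {}  # source node id -> list of outgoing edges

    def add_edge(self, source, target, relation, timestamp):
        edge = Edge(source, target, relation, timestamp)
        self._out.setdefault(source, []).append(edge)

    def timeline(self, node):
        """Return the outgoing edges of a node, oldest first."""
        return sorted(self._out.get(node, []), key=lambda e: e.timestamp)


graph = InteractionGraph()
graph.add_edge("customer:42", "campaign:back-to-school", "received", 1_700_000_300)
graph.add_edge("customer:42", "item:sku-9", "clicked", 1_700_000_100)
graph.add_edge("customer:42", "channel:email", "opened", 1_700_000_200)

relations = [edge.relation for edge in graph.timeline("customer:42")]
print(relations)  # ['clicked', 'opened', 'received']
```

Keeping a timestamp on every edge is what later allows a customer's edges to be read back as an ordered timeline.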

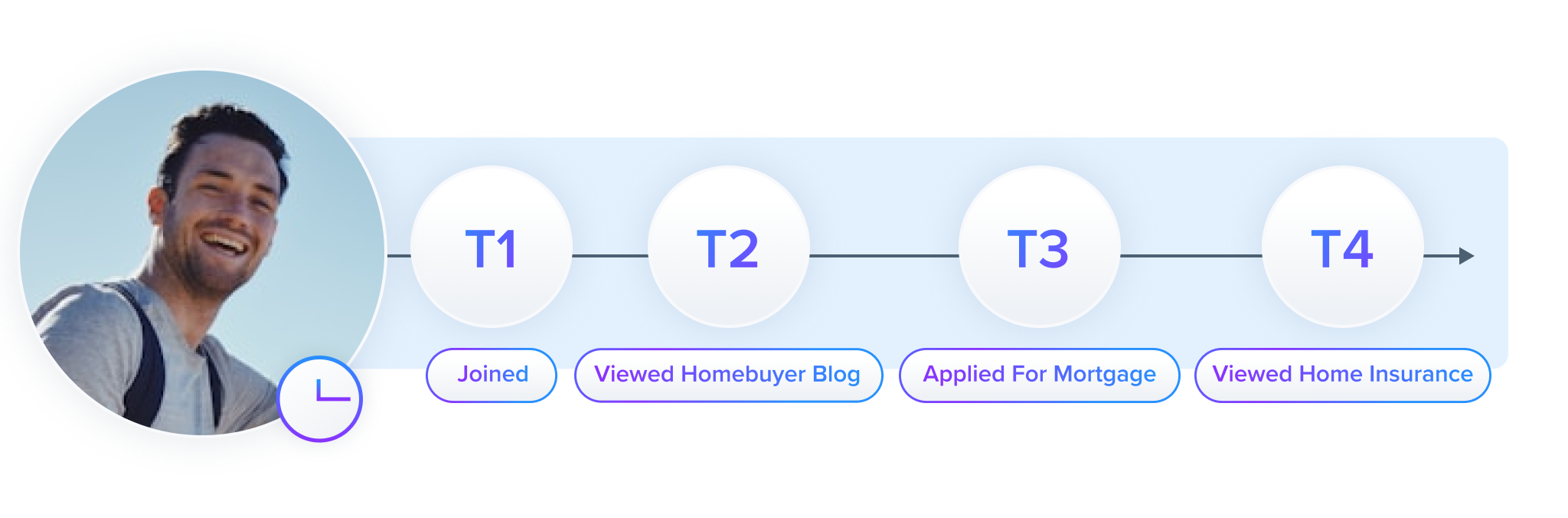

Encoding Interaction Graph Data

Once we have our interaction graph, the next step is to encode this data into a format suitable for LLMs. This involves transforming the graph into a temporal sequence of denormalized event data: each customer's edges, ordered by time, become a sequence of events that captures the order and context of each interaction. A tokenizer extended with special session-marker tokens can then convert these timelines into token sequences. Encoder-only transformers, such as BERT, can encode these sequences. By processing each session, these models can generate customer and entity embeddings that capture the essence of customer interactions and their relationships with other entities. Such embeddings provide a compact, rich representation of customer behavior, which can then be leveraged for various downstream tasks.
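As a rough illustration of the timeline step, the sketch below turns one customer's events into a flat token sequence with session markers. The marker strings `[SESSION_START]` and `[SESSION_END]`, the 30-minute inactivity gap, and the `relation:target` token format are assumptions chosen for the example; a real pipeline would register its markers with whatever tokenizer the encoder uses.

```python
SESSION_GAP_SECONDS = 30 * 60  # assumed inactivity threshold between sessions


def timeline_to_tokens(events, gap=SESSION_GAP_SECONDS):
    """Convert (timestamp, relation, target) tuples into session-marked tokens."""
    tokens = []
    previous_ts = None
    for ts, relation, target in sorted(events):
        # Open a new session on the first event or after a long pause.
        if previous_ts is None or ts - previous_ts > gap:
            if previous_ts is not None:
                tokens.append("[SESSION_END]")
            tokens.append("[SESSION_START]")
        tokens.append(f"{relation}:{target}")
        previous_ts = ts
    if previous_ts is not None:
        tokens.append("[SESSION_END]")
    return tokens


events = [
    (9000, "opened", "campaign:back-to-school"),
    (1000, "viewed", "item:sku-9"),
    (1060, "clicked", "item:sku-9"),
]
tokens = timeline_to_tokens(events)
print(" ".join(tokens))
```

Here the first two events fall into one session and the third, arriving more than 30 minutes later, opens a second one. The resulting sequence is what an encoder would consume to produce session-level embeddings.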

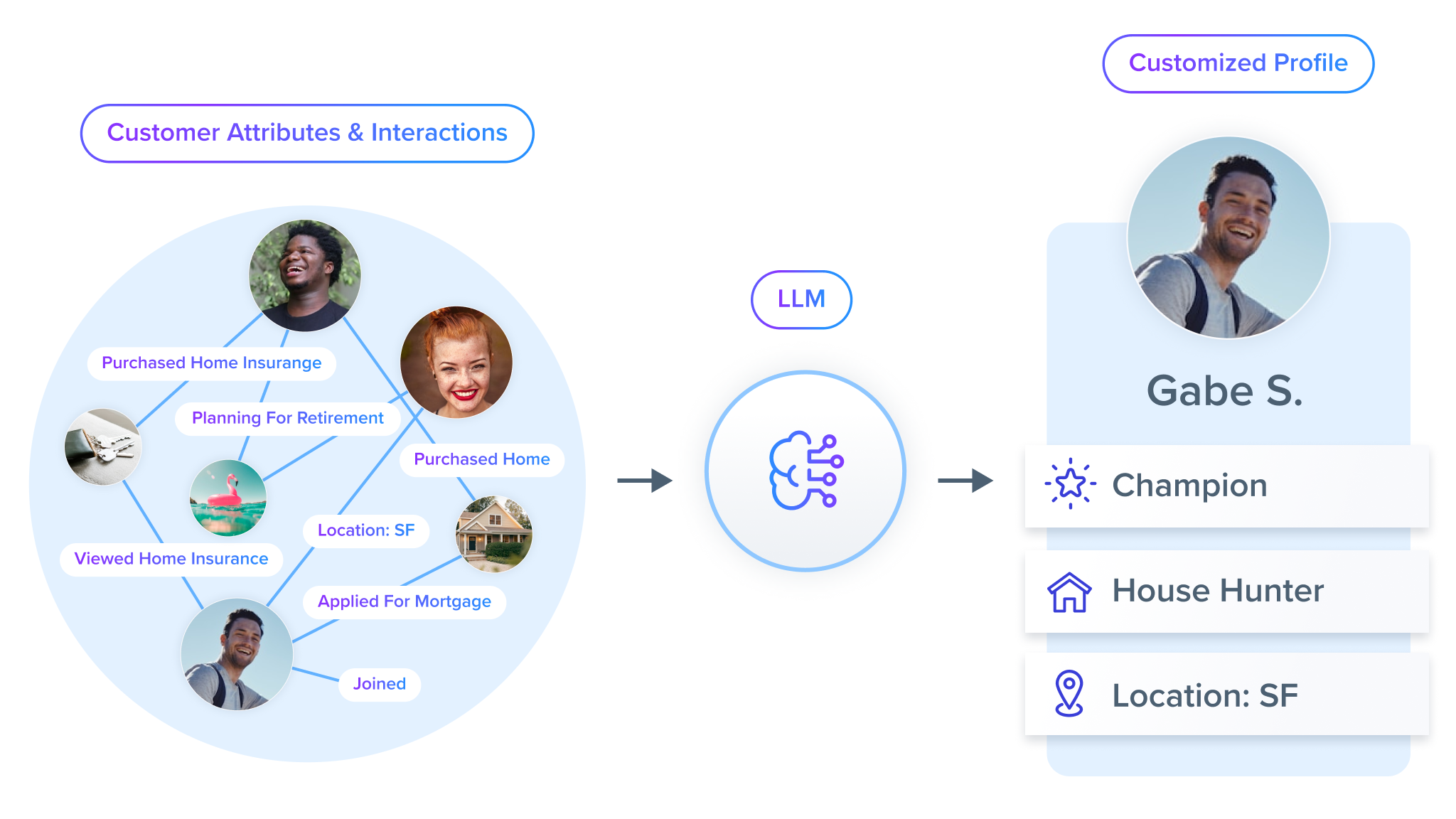

Applying LLMs to Customer Engagement Problems

With customer and catalog encodings derived from the interaction graph, we can apply LLM-inspired transformers to address common customer engagement challenges. Here are a few sample use cases:

- Catalog predictions: By analyzing a sequence of customer events, we can predict which catalog items a customer will likely be interested in. The encoder-decoder model can generate recommendations based on the encoded customer preferences and past interactions. In a streaming media context, this might mean the next shows or genres the customer is likely to watch; in a retail context, it might mean seasonal predictions and the next best items to recommend.

- Campaign and template personalization: Using prior engagement data, we can determine which campaigns and templates are most relevant to a customer. This can be framed as a supervised learning task where the model learns to match customer profiles with new campaign elements based on historical data. For example, upcoming back-to-school campaigns in a retail context can be further personalized based on the customer's visual and tone preferences and first-party CRM data.

- Channel and time optimization: Understanding the temporal patterns in customer interactions allows us to predict the best channels and times for engagement. The model can optimize outreach strategies by incorporating seasonal and temporal embeddings to maximize customer response rates.

- Behavior predictions: Encoder-decoder models can also predict future app or site behaviors by analyzing past interactions. This helps personalize user experiences and anticipate customer activation, repeat transactions, or churn probabilities.

- Offer redemption predictions: By analyzing sequences of interactions, the encoder-decoder model can predict which offers a customer is likely to redeem. This enables targeted marketing and increases the effectiveness of promotional campaigns.
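Several of the use cases above reduce to predicting the next event from the events before it. One way to set that up, sketched here under assumptions, is to slice each customer's sequence into (context, target) training pairs. The function name `next_event_pairs`, the `max_context` window, and the sample event tokens are hypothetical; the model that would be trained on these pairs is out of scope for the sketch.

```python
def next_event_pairs(tokens, max_context=4):
    """Turn one event sequence into (context, target) training examples."""
    pairs = []
    for i in range(1, len(tokens)):
        # Keep at most `max_context` events immediately before the target.
        context = tokens[max(0, i - max_context):i]
        pairs.append((context, tokens[i]))
    return pairs


sequence = ["viewed:show-a", "viewed:show-b", "rated:show-b", "viewed:show-c"]
pairs = next_event_pairs(sequence, max_context=2)
for context, target in pairs:
    print(context, "->", target)
```

The same framing applies whether the target is a catalog item, an offer redemption, or a churn-related event; only the vocabulary of targets changes.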

Cost Implications of Training Customer LLMs

Training LLMs on tens of millions of user sessions is computationally intensive. The cost includes the hardware and cloud resources required for training, plus the time and expertise needed to fine-tune and optimize these models. Inference costs can be amortized over large pools of customers by using look-alike modeling on embeddings. If the system is designed carefully, the benefits of improved customer engagement and personalized marketing can outweigh these costs by driving higher conversion rates and customer satisfaction.
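One possible reading of look-alike modeling, shown here as a sketch: run the expensive model only on a small set of seed customers, then give every other customer the score of its most similar seed by cosine similarity of embeddings. The two-dimensional embeddings, the seed scores, and the function names are made up for illustration, and nearest-seed assignment is just one simple variant of the idea.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def lookalike_scores(seed_scores, seed_embeddings, customer_embeddings):
    """Assign each customer the score of its most similar seed customer."""
    result = {}
    for customer_id, embedding in customer_embeddings.items():
        nearest = max(
            seed_embeddings,
            key=lambda seed_id: cosine(embedding, seed_embeddings[seed_id]),
        )
        result[customer_id] = seed_scores[nearest]
    return result


# Seeds were scored by the full model; everyone else reuses those scores.
seed_embeddings = {"seed-a": [1.0, 0.0], "seed-b": [0.0, 1.0]}
seed_scores = {"seed-a": 0.9, "seed-b": 0.2}
customers = {"c1": [0.8, 0.1], "c2": [0.1, 0.7]}

scores = lookalike_scores(seed_scores, seed_embeddings, customers)
print(scores)  # {'c1': 0.9, 'c2': 0.2}
```

With this setup, the number of full model inferences grows with the number of seeds rather than the number of customers, at the price of coarser scores for non-seed customers.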

Key Takeaway

To summarize, using LLMs to encode customer data and combining encoder and decoder architectures open up new possibilities for personalized marketing and customer engagement. The rich representations generated by these models enable accurate predictions and targeted strategies, enhancing the overall customer experience.

By leveraging the power of LLMs, businesses can stay ahead in the competitive landscape, delivering personalized experiences that resonate with customers and drive long-term loyalty and revenue.